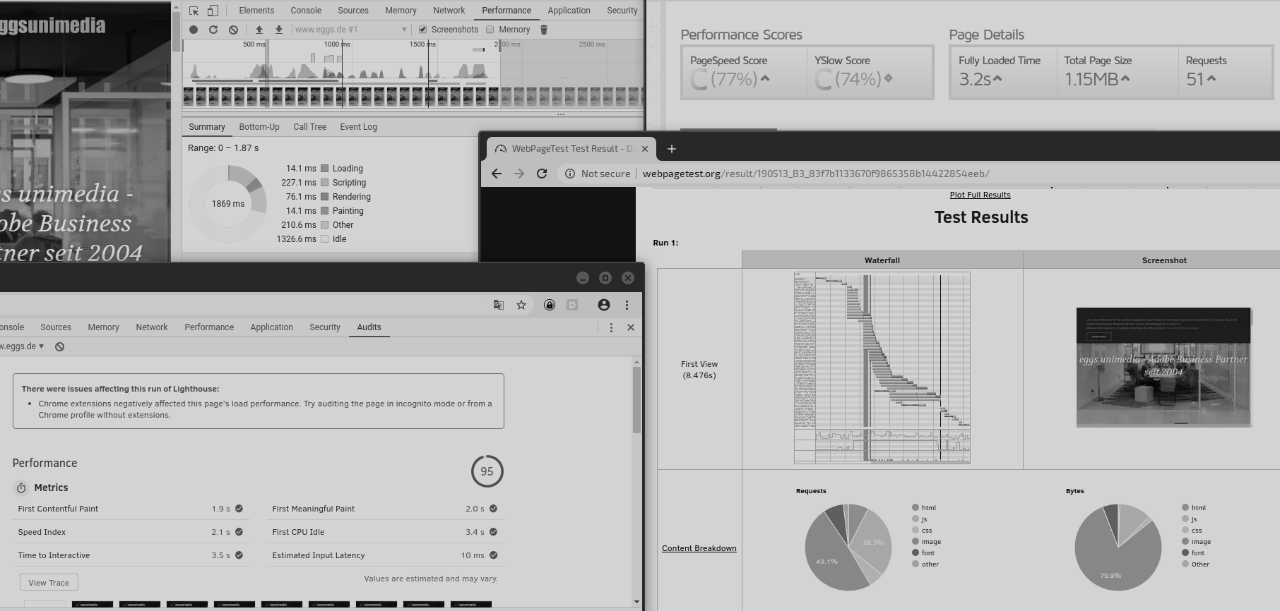

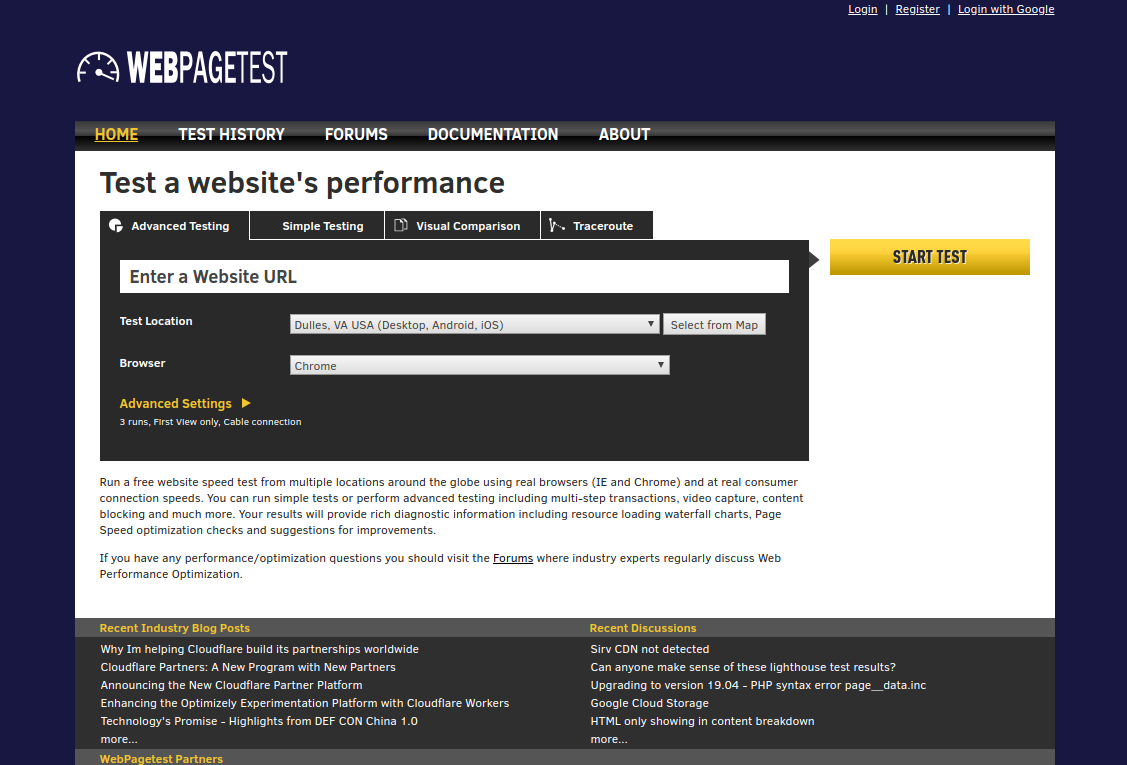

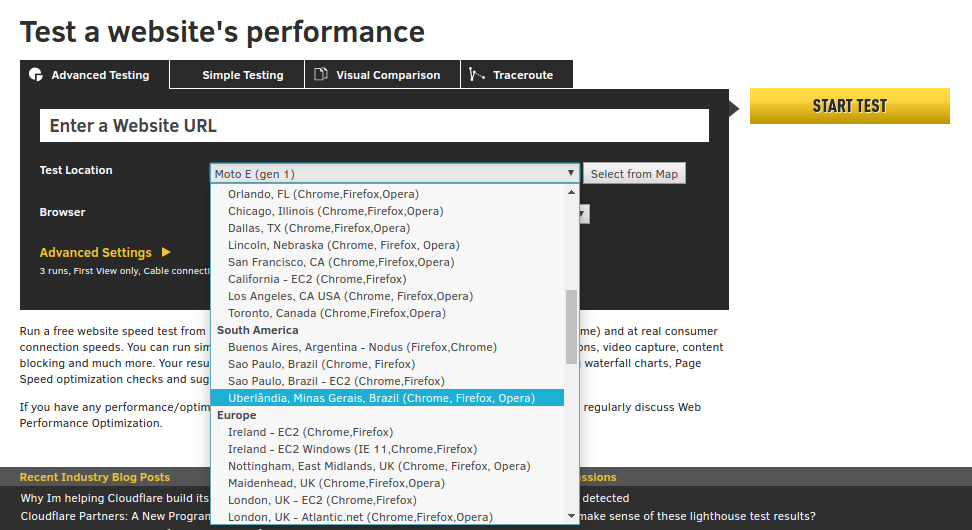

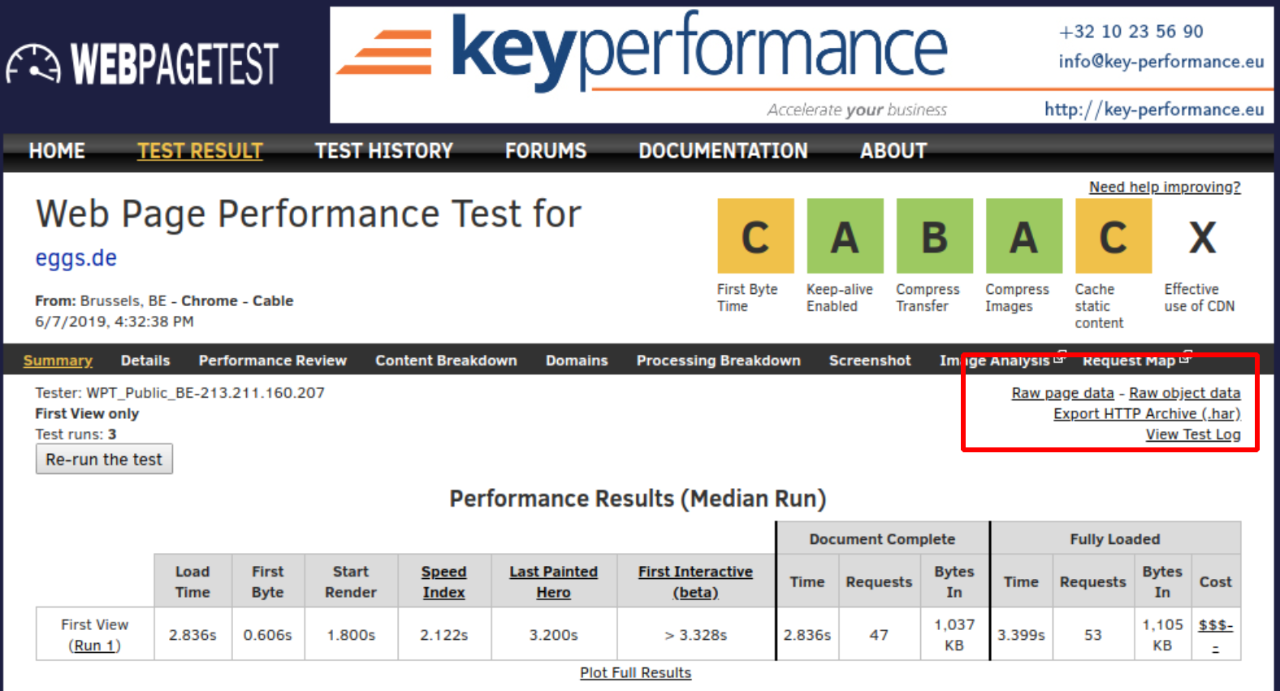

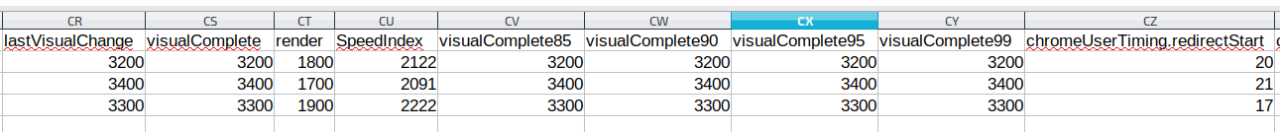

If you want to keep the KPI values, you can export the test data as CSV: click "Raw Page Data" to start the download. Opened in a spreadsheet program, the file shows all the data collected for the tested pages in extremely long rows, but relevant fields such as visualComplete and SpeedIndex are usually still easy to find.
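If the long rows become unwieldy in a spreadsheet, the relevant columns can also be pulled out with a few lines of Python. The following is only a minimal sketch: it assumes the export has header columns named exactly visualComplete and SpeedIndex, as mentioned above, and the sample data is made up for illustration. Check the header row of your own export before relying on these names.

```python
import csv
import io

# Column names as mentioned in the text; the headers in a real export may differ.
KPI_FIELDS = ("visualComplete", "SpeedIndex")


def extract_kpis(csv_text, fields=KPI_FIELDS):
    """Return one dict per CSV row, keeping only the requested KPI columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = []
    for row in reader:
        kpis = {}
        for name in fields:
            value = row.get(name)
            # Missing columns and empty cells become None, everything else a float.
            kpis[name] = float(value) if value not in (None, "") else None
        rows.append(kpis)
    return rows


# Hypothetical two-row sample standing in for a real "Raw Page Data" export.
sample = (
    "URL,loadTime,visualComplete,SpeedIndex\n"
    "https://example.com/,2310,1900,1450\n"
    "https://example.com/,2205,,1390\n"
)

for entry in extract_kpis(sample):
    print(entry)
```

For a downloaded file, read its contents with `open(path, newline="").read()` and pass the text to `extract_kpis`.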

Testing via webpagetest.org does give you some data persistence anyway, although it is not under your control: in our test, old measurements were still available after more than a year. To be on the safe side, you should still export the data in .har and .csv format, but keeping the links to the test results is also a good idea and will probably suffice as a reference over a longer period.

Monitoring

The tools mentioned above offer a wide range of functions and can collect a large amount of data, which should be a sufficient basis for discussion for most purposes. The clearly presented comparisons with competitors in webpagetest, as well as manual comparisons of past and current test results, also provide a broad and very helpful basis for analysis. However, the more complex a web application becomes and the more partners are involved, the greater the risk of unexpected peaks that spot measurements will not catch. If you want to monitor the performance and reliability of a site continuously, you cannot avoid spending money. With the webpagetest API, you can certainly build an application yourself that continuously triggers measurements and presents the results in a visually appealing way.
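To give an idea of what such a self-built application starts with, here is a rough sketch of a loop that triggers tests at a fixed interval. It assumes the public webpagetest.org instance, the `runtest.php` endpoint and the query parameters `url`, `k` (API key) and `f=json`; please verify these against the current API documentation. The function names, the API key placeholder and the interval are hypothetical, and storing and visualizing the results is left out entirely.

```python
import json
import time
import urllib.parse
import urllib.request

WPT_HOST = "https://www.webpagetest.org"  # assumption: the public instance


def build_runtest_url(page_url, api_key, host=WPT_HOST):
    """Build the request URL that asks for a new test run with a JSON response."""
    query = urllib.parse.urlencode({"url": page_url, "k": api_key, "f": "json"})
    return f"{host}/runtest.php?{query}"


def fetch_json(url):
    """Send a GET request and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.load(response)


def monitor(page_url, api_key, runs, interval_seconds,
            fetch=fetch_json, sleep=time.sleep):
    """Trigger `runs` tests, pausing between them, and collect the responses."""
    responses = []
    for i in range(runs):
        responses.append(fetch(build_runtest_url(page_url, api_key)))
        if i < runs - 1:
            sleep(interval_seconds)
    return responses


if __name__ == "__main__":
    # Only prints the URL; call monitor(...) with a real key to submit tests.
    print(build_runtest_url("https://example.com/", "YOUR_API_KEY"))
```

The `fetch` and `sleep` parameters are injectable so that the loop can be tried out without sending real requests or waiting. In a real setup a cron job or scheduler would be more robust than a long-running loop.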

But for this you need, at the very least, time, knowledge, staff and your own server. If you don't want to take on this work, the already familiar GTmetrix also offers plans that require registration but are free of charge and that do exactly this for you. When it comes to monitoring, however, you inevitably move more and more toward commercial providers. Their packages range from simple monitoring and notification systems for load time peaks or outages to entire teams that advise, develop solutions and, if necessary, even implement them.
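What a notification system means by a "load time peak" can be illustrated with a very simple rule: flag a measurement if it exceeds the median of the preceding measurements by a certain factor. The sketch below is one possible heuristic, not the method used by any of the tools mentioned; the function name, window size, factor and sample values are all assumptions chosen for illustration.

```python
from statistics import median


def find_peaks(load_times_ms, window=5, factor=1.5):
    """Return indices whose value exceeds factor * median of the preceding window."""
    peaks = []
    for i in range(window, len(load_times_ms)):
        baseline = median(load_times_ms[i - window:i])
        if load_times_ms[i] > factor * baseline:
            peaks.append(i)
    return peaks


# Hypothetical load times in milliseconds with one obvious outlier.
samples = [2100, 2050, 2200, 2150, 2080, 5400, 2120, 2090]
print(find_peaks(samples))
```

Using the median rather than the mean keeps a single outlier from distorting the baseline for the measurements that follow it.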

The "Application Performance Management" platform Dynatrace should at least be mentioned here. Its scope of services ranges from synthetic and real user monitoring of frontend performance KPIs to the tracking of hardware metrics in AWS. The data can be linked to consulting offerings and visualized in configurable dashboards. As indicated, Dynatrace's offering is vast and complex. This complexity, and the cost of the tool, must of course be weighed against how pressing the performance issues actually are.

The Calibre tool, which collects measurements and saves filmstrips at configured times, offers less functionality but is also less complex. If you want to avoid additional manual effort, it can also cover the need to visualize improvements, for example only during an interim phase between several releases.

If you are looking for more tools, this article by KeyCDN is recommended: https://www.keycdn.com/blog/website-speed-test-tools. It presents several more interesting tools for evaluating performance. The reports from GTmetrix and Pingdom in particular have proven to be clear, comparable and informative.

However, since web development and the optimization of web products will continue to change quickly, it is worth taking a regular look at new tools and developments.